Improving performance of AI models in presence of artifacts

Our deep learning models have become very good at recognizing hemorrhages in head CT scans. Real-world performance, however, is sometimes hampered by external factors, both hardware-related and human-related. In this blog post, we analyze how acquisition artifacts degrade performance and describe two methods we tried in order to solve this problem.

Medical imaging is often accompanied by acquisition artifacts, which can be subject-related or hardware-related. These artifacts make confident diagnostic evaluation difficult in two ways:

- by overlaying abnormalities and making them less obvious visually.

- by mimicking an abnormality.

Some common examples of artifacts are:

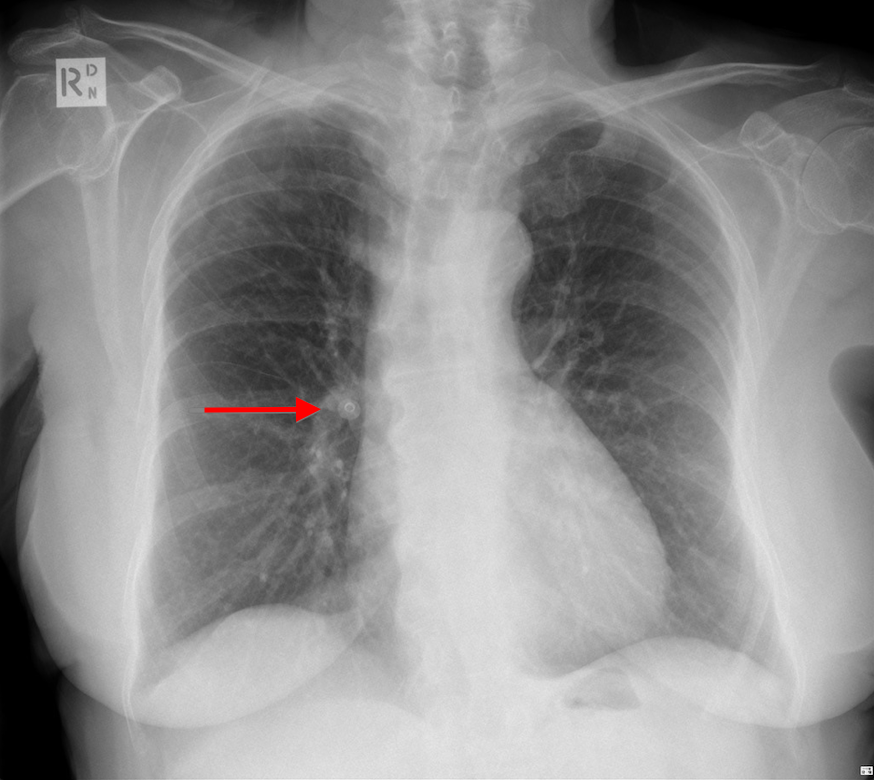

- Clothing artifact: caused by clothing on the patient at acquisition time. See fig 1 below. Here a button on the patient’s clothing, marked by the red arrow, looks like a coin lesion on a chest X-ray.

Fig 1. A button mimicking a coin lesion in a chest X-ray, marked by the red arrow. Source.

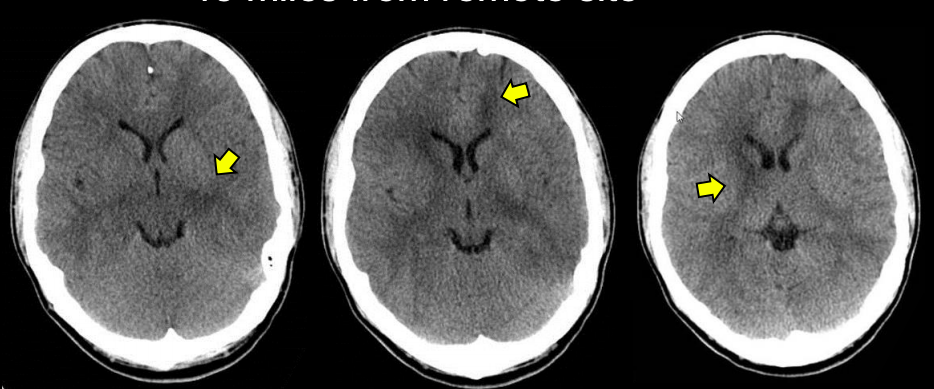

- Motion artifact: caused by voluntary or involuntary subject motion during acquisition. Severe motion artifacts due to voluntary motion usually call for a rescan. Involuntary motion such as respiration or cardiac motion, or minimal subject movement, can result in artifacts that go undetected and mimic a pathology. See fig 2. Here subject movement has resulted in motion artifacts that mimic subdural hemorrhage (SDH).

Fig 2. Artifact due to subject motion, mimicking a subdural hemorrhage in a head CT. Source.

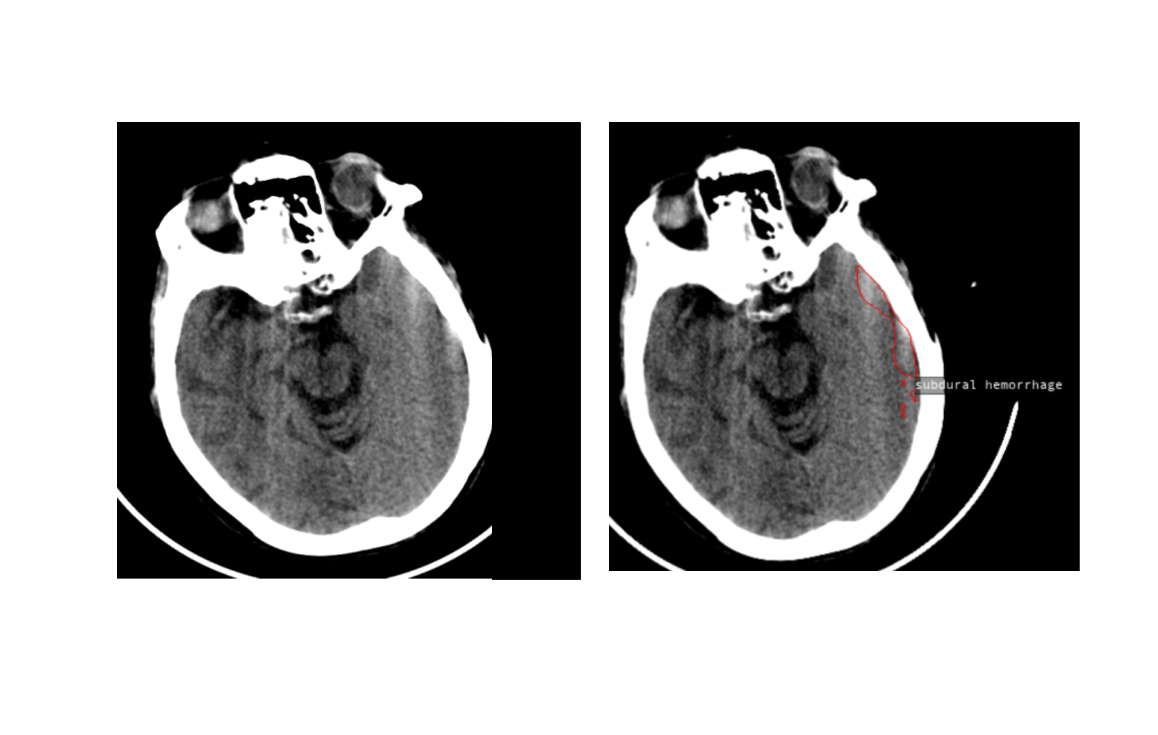

- Hardware artifact: see fig 3. This artifact is caused by air bubbles in the cooling system. There are subtle, irregular dark bands in the scan that can be misidentified as cerebral edema.

Fig 3. A hardware-related artifact mimicking cerebral edema, marked by yellow arrows. Source.

Here we investigate motion artifacts that look like SDH in head CT scans. These artifacts increase the number of false positive (FP) predictions made by subdural hemorrhage models. We confirmed this by quantitatively analyzing the FPs of our AI model deployed at an urban outpatient center. The FP rate was higher on this data than on our internal test dataset. These false positives occur because the training set lacks variety in artifact-ridden data. It is practically difficult to acquire scans containing every variety of artifact and include them in the training set.

Fig 4. The model identifies an artifact slice as SDH because of similarity in shape and location. Both are hyperdense areas close to the cranial bones.

We tried to solve this problem in the following two ways.

- Making the models invariant to artifacts by explicitly including artifact images in our training dataset.

- Discounting slices with artifacts when calculating the probability of bleed in a scan.

Method 1. Artifact as an augmentation using Cycle GANs

We reasoned that the artifacts were misclassified as bleeds because the model had not seen enough artifact scans during training. The number of images containing artifacts is relatively small in our annotated training dataset. However, we have access to several unannotated scans containing artifacts, acquired from various centers with older CT scanners. (Motion artifacts are more prevalent with older CT scanners that have poor in-plane temporal resolution.) If we could generate artifact-ridden versions of all the annotated images in our training dataset, we would be able to effectively augment the training dataset and make the model invariant to artifacts. We decided to use a Cycle GAN to generate new training data containing artifacts.

Cycle GAN [1] is a generative adversarial network used for unpaired image-to-image translation. It serves our purpose because we have an unpaired image translation problem in which the X domain contains our training set CT images with no artifacts and the Y domain contains artifact-ridden CT images.

Fig 5. Cycle GAN used to convert a short clip of a horse into that of a zebra. Source.

We curated a dataset A of 5000 images without artifacts and a dataset B of 4000 images with artifacts, and used them to train the Cycle GAN.
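To make the idea of unpaired translation concrete, here is a minimal sketch of the cycle-consistency term described in the Cycle GAN paper [1]: an image translated to the other domain and back should return close to where it started. The toy "generators" `G` and `F` below are hypothetical stand-ins (simple pixel offsets), not trained networks and not the code we used. A real Cycle GAN also includes adversarial losses, which are omitted here.

```python
# Toy illustration of the cycle-consistency term from the Cycle GAN paper [1].
# Images are flat lists of pixel values. G and F are hypothetical stand-ins
# for the two generators (clean -> artifact, artifact -> clean).

def l1_distance(a, b):
    """Mean absolute difference between two equally sized images."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)

def cycle_consistency_loss(G, F, clean_batch, artifact_batch):
    """Average L1 error of the round trips X -> Y -> X and Y -> X -> Y."""
    forward = sum(l1_distance(F(G(x)), x) for x in clean_batch) / len(clean_batch)
    backward = sum(l1_distance(G(F(y)), y) for y in artifact_batch) / len(artifact_batch)
    return forward + backward

def G(image):
    # Hypothetical "add artifact" generator: brightens every pixel.
    return [p + 0.25 for p in image]

def F(image):
    # Hypothetical "remove artifact" generator that under-corrects.
    return [p - 0.125 for p in image]

clean_batch = [[0.0, 0.5, 1.0], [0.25, 0.25, 0.75]]   # domain X (no artifact)
artifact_batch = [[0.5, 0.75, 1.0]]                   # domain Y (with artifact)

loss = cycle_consistency_loss(G, F, clean_batch, artifact_batch)
print(loss)
```

Because the two batches are never matched image-for-image, no paired clean and artifact versions of the same scan are needed, which is what makes the approach attractive for our data.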

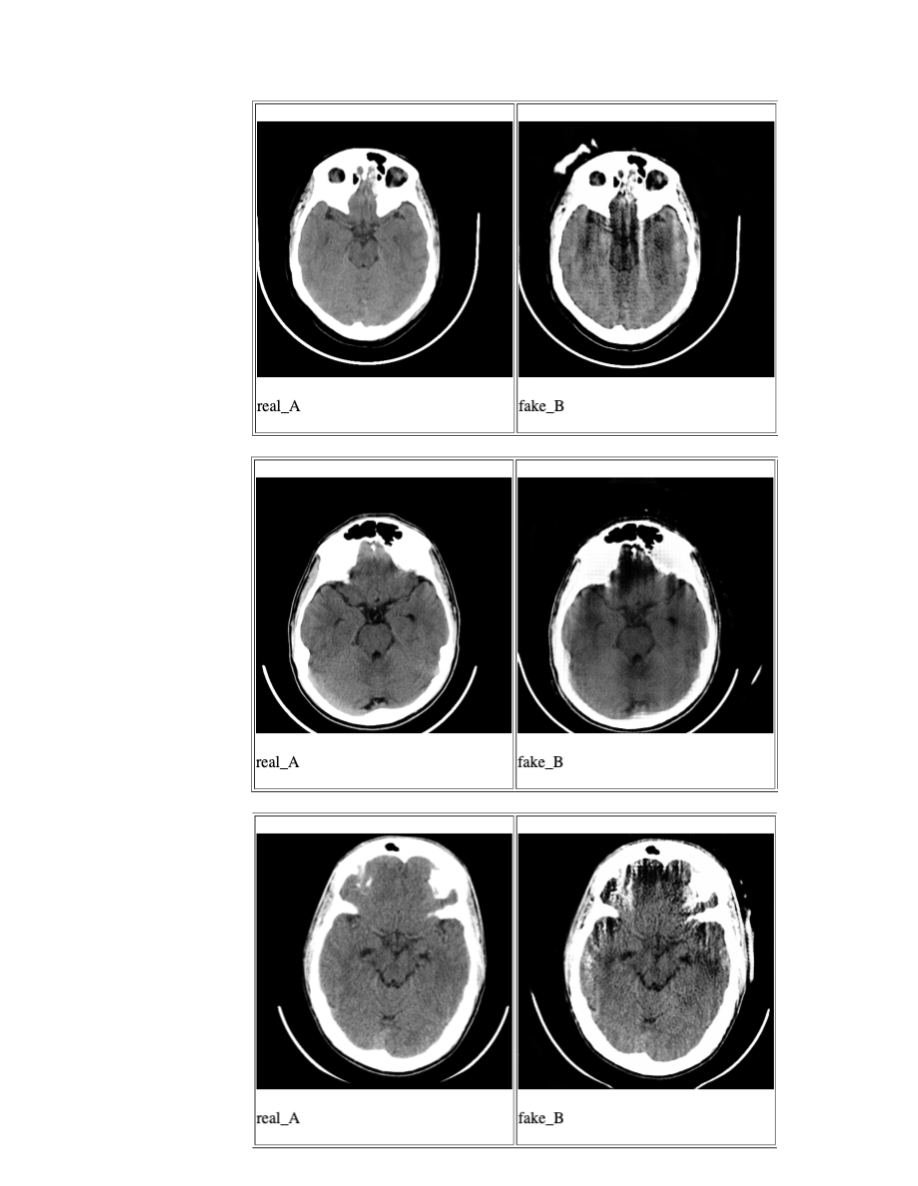

Unfortunately, the quality of the generated images was not very good. See fig 6. The generator was unable to capture all the variety in the CT dataset and introduced artifacts of its own, which made it useless for augmenting the dataset. The Cycle GAN authors state that the generator performs worse when the transformation involves geometric changes (for example, dog to cat or apples to oranges) than when it involves color or style changes. Adding artifacts is more complex than a color or style change because it has to introduce distortions to existing geometry. This could be one of the reasons why the generated images have extra artifacts.

Fig 6. A sample of images generated using Cycle GAN. real_A are input images and fake_B are the artifact images generated by Cycle GAN.

Method 2. Discounting artifact slices

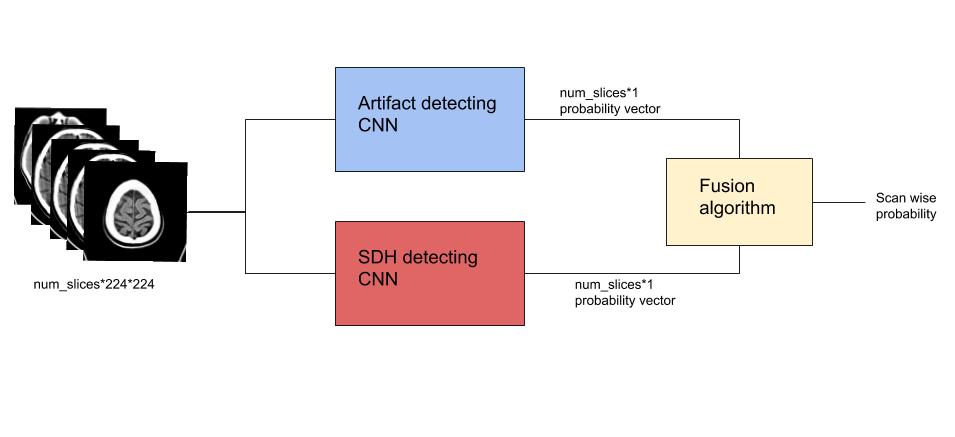

In this method, we trained a model to identify slices with artifacts and show that discounting these slices makes the AI model that identifies subdural hemorrhage (SDH) robust to artifacts. A manually annotated dataset was used to train a convolutional neural network (CNN) to detect whether a CT slice contains artifacts. The original SDH model was also a CNN, which predicted whether a slice contained SDH. The probabilities from the artifact model were used to discount the slices containing artifacts, so that only the artifact-free slices of a scan were used to compute the score for the presence of bleed. See fig 7.

Fig 7. Method 2: using a trained artifact model to discount artifact slices while calculating SDH probability.
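The discounting step can be sketched as follows. This is only an illustration under stated assumptions: the post does not specify how slice probabilities are combined into a scan-level score, so the sketch assumes the score is the maximum SDH probability over slices, and the function name `scan_score` and the `artifact_threshold` of 0.5 are hypothetical.

```python
# Sketch of Method 2: ignore slices flagged by the artifact model when
# computing the scan-level SDH score.
# Assumptions (not from the original pipeline): the scan score is the max
# over slice probabilities, and a slice counts as "artifact" when the
# artifact model's probability is at or above artifact_threshold.

def scan_score(sdh_probs, artifact_probs=None, artifact_threshold=0.5):
    """Return the scan-level SDH score from per-slice probabilities."""
    if artifact_probs is None:
        # Original behaviour: every slice contributes.
        return max(sdh_probs, default=0.0)
    clean = [
        p for p, a in zip(sdh_probs, artifact_probs)
        if a < artifact_threshold
    ]
    # If every slice is flagged as artifact, fall back to a score of 0.
    return max(clean, default=0.0)

# Hypothetical scan: slice 2 is a motion artifact that the SDH model
# mistakes for a bleed.
sdh_probs = [0.05, 0.10, 0.92, 0.08]
artifact_probs = [0.02, 0.03, 0.97, 0.01]

original = scan_score(sdh_probs)
discounted = scan_score(sdh_probs, artifact_probs)
print(original, discounted)
```

With a decision threshold of, say, 0.5, the hypothetical scan above flips from positive to negative once the artifact slice is discounted. The same filtering would also drop a slice that contains both an artifact and a true SDH.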

Results

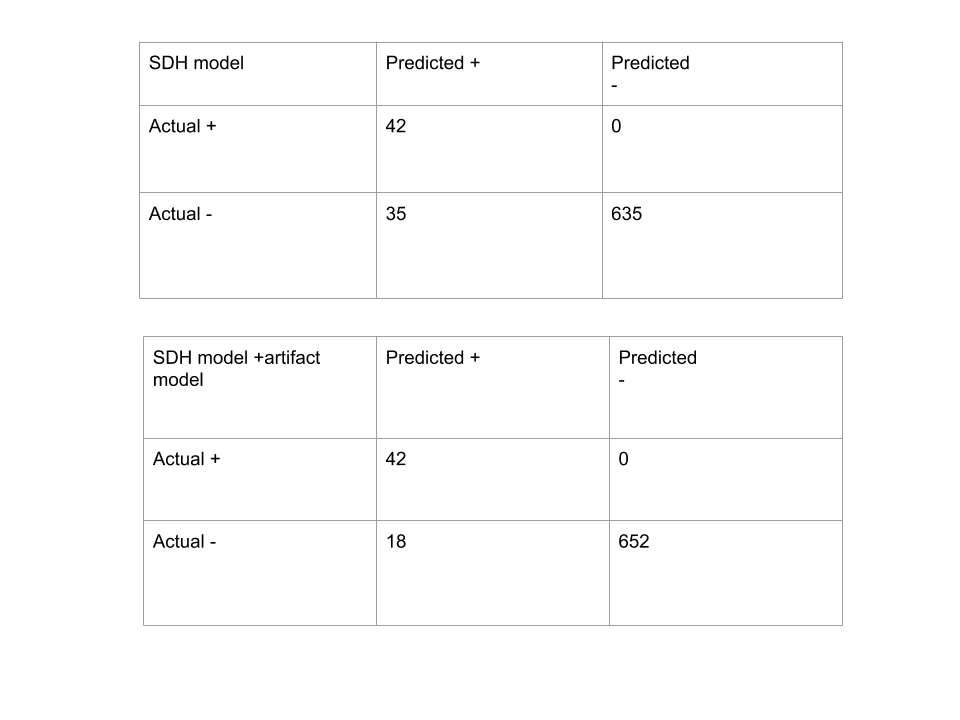

Our validation dataset contained 712 head CT scans, of which 42 contained SDH. The original SDH model predicted 35 false positives and no false negatives. Quantitative analysis of the FPs confirmed that 17 of them (48.6%) were due to CT artifacts. Our trained artifact model had a slice-wise AUC of 96%. The proposed modification to the SDH model reduced the FPs to 18 (a decrease of 48.6%) without introducing any false negatives. Thus, using method 2, all scan-wise FPs due to artifacts were corrected.

In summary, using method 2, we improved the precision of SDH detection from 54.5% to 70% while maintaining a sensitivity of 100%.

Fig 8. Confusion matrix before and after using the artifact model for SDH prediction.
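The precision and sensitivity figures follow directly from the counts reported above. As a quick check, a short sketch of the arithmetic (variable names are ours, chosen for illustration):

```python
# Recompute the reported metrics from the scan-level counts in the text.

def precision(tp, fp):
    """Fraction of positive predictions that are correct."""
    return tp / (tp + fp)

def sensitivity(tp, fn):
    """Fraction of true SDH scans that the model detects."""
    return tp / (tp + fn)

true_positives = 42    # all 42 SDH scans detected
false_negatives = 0    # no SDH scan missed, before or after
fp_before = 35         # original SDH model
fp_after = 18          # SDH model with artifact slices discounted
artifact_fps = 17      # original FPs attributed to artifacts

precision_before = precision(true_positives, fp_before)
precision_after = precision(true_positives, fp_after)
recall = sensitivity(true_positives, false_negatives)
fp_reduction = (fp_before - fp_after) / fp_before

print(f"precision: {precision_before:.1%} -> {precision_after:.1%}")
print(f"sensitivity: {recall:.0%}")
print(f"FP reduction: {fp_reduction:.1%}")
```

The number of FPs removed (35 - 18 = 17) equals the number of FPs attributed to artifacts, which is why the FP reduction matches the artifact share of the original FPs.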

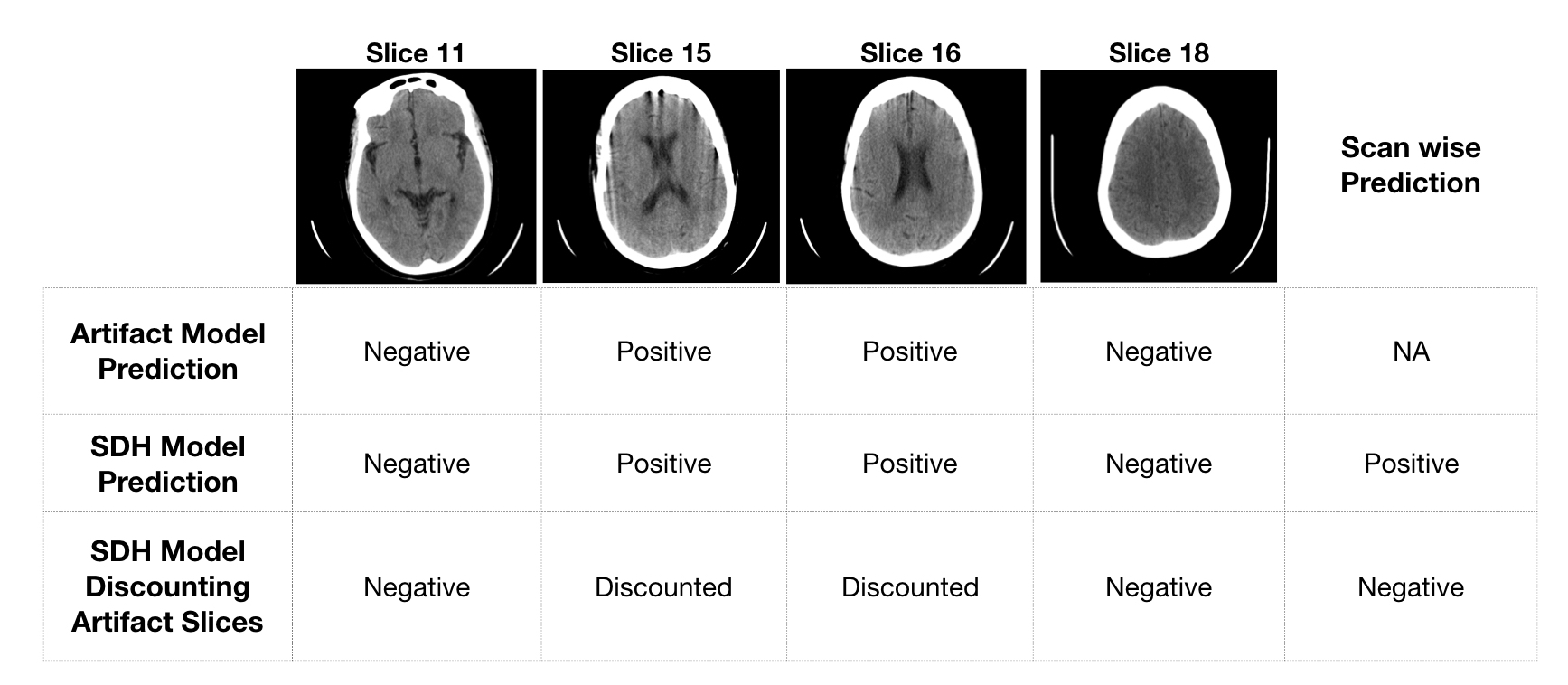

See fig 9 for model predictions on a representative scan.

Fig 9. Model predictions for a few representative slices in a scan falsely predicted as positive by the original SDH model.

A drawback of Method 2 is that if SDH and an artifact are present in the same slice, the SDH will probably be missed.

Conclusion

Using a Cycle GAN to augment the dataset with artifact-ridden scans could in principle solve the problem by enriching the dataset with both SDH-positive and SDH-negative scans that have artifacts overlaid on them. However, our current experiments do not produce realistic-looking synthesized images. The alternative we used, discounting artifact slices, reduces the high number of false positives due to artifacts while maintaining the same sensitivity.

References

- [1] Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks, Jun-Yan Zhu et al.